This post will help to provide historical context and demystify what's under the hood of Heads, PureBoot, and other tools to provide Trusted Boot.

I will not be presenting anything new in this article; I merely hope to provide a historical timeline and a curated list of resources.

Intro

The Librem Key cryptographically verifies the system's integrity and flashes red if it detects tampering

I've always felt bad about two things:

- Because I run QubesOS, I usually disable "Secure Boot" on my laptop

- I travel a lot, and I don't have a good way to verify the integrity of my laptop (eg from an Evil Maid that gains physical access to my computer)

To address this, I have turned to Heads and PureBoot -- a collection of technologies including an open-source firmware/BIOS, a TPM, and a USB security key that can cryptographically verify the integrity of the lowest-level firmware (and on up the chain to the OS).

While Purism has written many articles about PureBoot and has some (minimal) documentation, I found they did a lot of hand-waving without explaining how the technology works (what the hell is a "BIOS measurement"?). So I spent a great deal of time researching the technologies leveraged by PureBoot -- but it wasn't easy.

One of the biggest issues I've had in understanding these technologies is the controversial approach to Trusted Computing. To quote Wikipedia:

"TC is controversial as the hardware is not only secured for its owner, but also secured against its owner."

(source)

Unfortunately, when learning about some infosec topics, it takes an enormous amount of information literacy and prerequisite knowledge to separate information sources that are designed merely for Enterprise from those that are adequate for Cypherpunks.

Enterprise Security vs Cypherpunk Security

Working as a cybersecurity consultant, I've fought (and sometimes failed) to prevent management from installing remote-access backdoor rootkits company-wide.

I can't even believe I have to say this, but I firmly believe that remote-access backdoors with the power to [a] execute arbitrary commands as root and [b] upload/download any files from a machine should never be deployed. Especially when it's provided by an untrustworthy MSP based in a country whose government has the legal authority to force them to do nasty things to their customers (and they have a history of doing it).

Julian Assange, a well-known Cypherpunk

But in an Enterprise environment, security doesn't mean the same thing as it does to Cypherpunks. Largely, Enterprises (or, more specifically, capitalist organizations) aren't so interested in protecting secrets. They're interested in protecting their capital. And capital can be protected by non-technical solutions (eg insurance). Because of this, technology targeting Enterprise Security may not meet your threat model's requirements. And because vendors are trying to sell you something, they may not clearly state which threat models they don't protect against. So you can easily spend a whole day reading well-funded security research, only to realize in the end that it's useless. Secure Boot and AMT are examples of this.

Enterprise Security is more aligned with Authoritarianism -- an ideology that champions hierarchy and power given to a centralized authority.

Cypherpunk Security, on the other hand, is more aligned with Anarchism -- an ideology that champions individual autonomy.

While Secure Boot provides weak protections and strips power away from the user, Heads and PureBoot provide strong protections and empower the user. But they do so while still using the same hardware technology that's traditionally used in an Enterprise environment -- which was designed with the intention to strip the user of their autonomy.

With this article, I hope to provide the resources to help readers understand how PureBoot was able to leverage tools like TPMs yet still cover the Cypherpunk's threat model.

Assumptions & Scope

The Purism Librem 14 ships with Heads pre-installed

We assume the reader has a basic understanding of cryptographic authentication, but not of Trusted Computing.

This article won't show how to install Heads on your system.

We're also not going to talk about hardware. You should probably buy a device that is known to work with Heads. Or make your life easy by buying one that already ships with Heads pre-installed.

Timeline

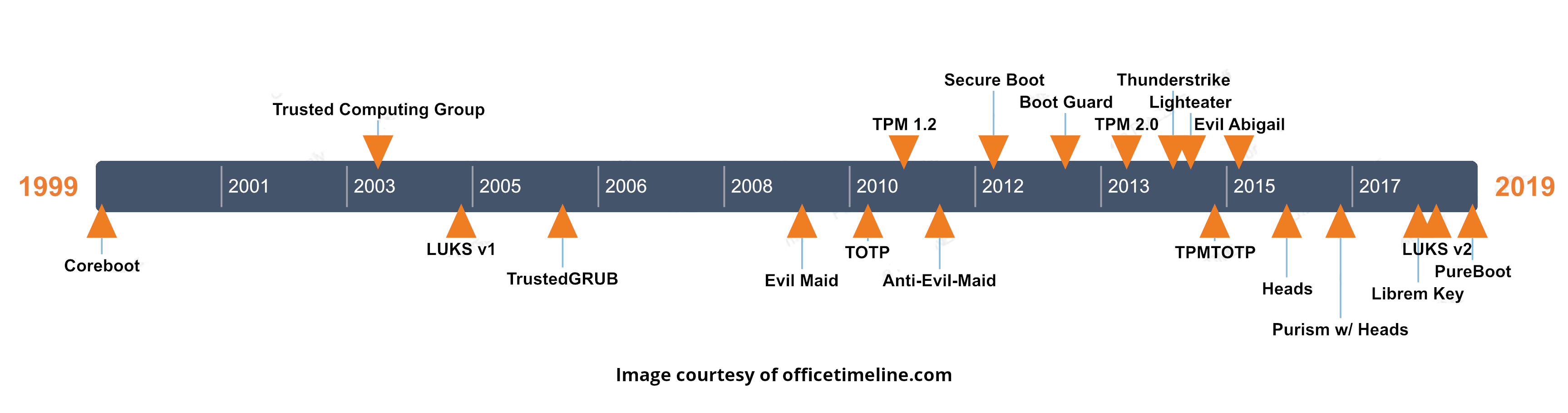

For context, I'll provide a chronological timeline of important developments on the road to a Trusted Boot that is appropriate for Cypherpunk Security.

While Trusted Computing goes back to 1999, much of the technology for Trusted Boot was built over the next 20 years, closer to 2019.

| Date | Event |
|---|---|
| 1999 | Coreboot |
| 2005-01 | LUKS v1 |
| 2006-06 | TrustedGRUB |
| 2009-10 | Evil Maid |
| 2010-09 | TOTP |
| 2011-03 | TPM 1.2 |
| 2011-09 | Anti Evil Maid |
| 2012-06 | Secure Boot |
| 2013-06 | Boot Guard |
| 2014-04 | TPM 2.0 |
| 2014-12 | Thunderstrike |
| 2015-03 | Lighteater |
| 2015-07 | TPMTOTP |
| 2015-11 | Evil Abigail |
| 2016-07 | Heads |
| 2017-04 | Purism w/ Heads |
| 2018-05 | Librem Key |
| 2018-08 | LUKS v2 |
| 2019-02 | PureBoot |

Technologies

This section will briefly describe the following technologies:

- Coreboot

- Full Disk Encryption

- Secure Boot

- The TPM

- Intel Boot Guard

- (Anti) Evil Maid

- Beyond Anti Evil Maid (TPMTOTP)

- Heads

- PureBoot

Coreboot

Coreboot, the open-source firmware

Trusted Boot follows as a chain of trust. As you climb up the stack from the firmware to the OS, the previous link in the chain verifies the next link in the chain before it executes it.

It's super important that the very first link in the chain (the root of trust that is the first bit of code executed by the processor) is trustworthy. This root-of-trust firmware is your BIOS (aka "UEFI Firmware" on modern systems).

Unfortunately, most devices on the market use a closed-source BIOS that can't be audited or patched by the community. This matters because if an attacker compromises your firmware, their malware can persist even if you reinstall the OS or replace the HDD. Historically, this has been a problem.

Fortunately, in 1999, a group of Linux hackers at LANL set out to fix this; they published "LinuxBIOS". In 2008, the project was renamed to Coreboot.

Full Disk Encryption

LUKS, the dominant Disk Encryption for Linux

Of course, it's assumed that you're using Full Disk Encryption (eg LUKS or VeraCrypt).

At some point you'll type a password to decrypt your disk. But how do you know that someone hasn't swapped your laptop with one that looks identical -- but this malicious laptop is merely going to relay your keystrokes to an attacker that's stolen your real laptop? You need a fast way for your computer to authenticate itself to you before you're prompted to type your FDE password.

Secure Boot

Secure Boot was a highly controversial technology released in 2012 as part of UEFI 2.3.1.

[Heads uses a] user provided GPG signing key (preferably in an external hardware token). This is a significant change from UEFI [Secure Boot], where the vendor and OEMs control the keys. It is important that updates and changes are signed by the user who installs them, not by random OEMs who may not have the best key management policies. We know that stuxnet used stolen kernel driver certificates, which were trusted by the OS.

-Trammell Hudson, creator of Heads

source

Due to its design flaws, Secure Boot was widely rejected by many in the security community, and many Operating Systems (QubesOS included) do not support it.

For cypherpunks, Heads is a more suitable solution for Trusted Boot.

The TPM

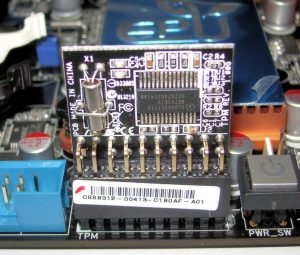

TPM installed on an Asus motherboard (source)

One of the main reasons I wrote this article was because I found it very difficult to find information about how the TPM plays into Heads. If you read the Purism documentation or google it, mostly what you find is that the TPM will only reveal its secret if the "measurements" match. But what the hell are these "measurements"?

First, the reason why most docs vaguely say "measurements" is because that's the word used in the official TPM specification (and, more generally, by the Trusted Computing Group), and that spec intentionally doesn't define how such "measurements" are used.

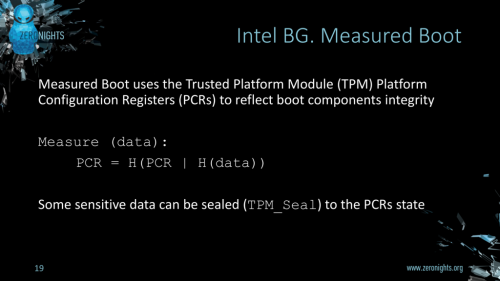

In the context of Trusted Boot with a TPM, a "measurement" is a cryptographic hash of a blob (of code and/or read-only data). And "measurements" are a set of cryptographic hashes that are stored in a subset of the TPM's PCRs (Platform Configuration Registers).

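PCRs (as defined in the TPM spec) are volatile registers (ie their values are reset on reboot). They're also special in that you cannot write data to a PCR directly. To change the value of a PCR, you must use the extend() operation, which is defined (Sec 16.1) as follows:

PCRnew = Hash( PCRold || value )

where || denotes concatenation and value is the measurement (itself a hash). Because every new value depends on the old one, the final PCR value reflects every measurement extended into it, and the order in which they were extended.

In TPM v1.2, the hash algorithm is SHA-1. TPM v2 supports multiple PCR banks, including SHA-256.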
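To make extend() concrete, here is a minimal Python sketch that models a single PCR in software with `hashlib`. It is a toy: a real TPM performs this inside the chip, and the boot code hands it a digest. The stage names are hypothetical placeholders for Coreboot's stages, and a SHA-256 bank is assumed (pass `"sha1"` and a 20-byte starting value to mimic TPM 1.2).

```python
import hashlib


def measure(blob: bytes, algo: str = "sha256") -> bytes:
    """A "measurement": the cryptographic hash of a blob of code or read-only data."""
    return hashlib.new(algo, blob).digest()


def extend(pcr: bytes, measurement: bytes, algo: str = "sha256") -> bytes:
    """Toy model of the TPM extend() operation: PCRnew = Hash(PCRold || measurement)."""
    return hashlib.new(algo, pcr + measurement).digest()


# After a reset the PCR holds all zeros (32 bytes for a SHA-256 bank).
pcr = bytes(32)

# Hypothetical boot stages, each measured and extended before it runs.
for stage in [b"bootblock", b"romstage", b"ramstage", b"payload"]:
    pcr = extend(pcr, measure(stage))

print(pcr.hex())
```

Swapping the order of two stages, or changing a single byte in any of them, produces a completely different final value. Since a PCR cannot be written directly, the only way to reach a given value (short of a reset) is to extend the same measurements in the same order.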

If you're using Heads, then the actual "measurements" and which PCRs they're extend()ed to are listed here:

0: Nothing for the moment (Populated by binary blobs where applicable for SRTM)

1: Nothing for the moment

2: coreboot’s Boot block, ROM stage, RAM stage, Payload (Heads linux kernel and initrd)

3: Nothing for the moment

4: Boot mode (0 during /init, then recovery or normal-boot)

5: Heads Linux kernel modules

6: Drive LUKS headers

7: Heads user-specific files stored in CBFS (config.user, GPG keyring, etc).

(16): Used for TPM futurecalc of LUKS header when setting up a TPM disk encryption key

The TPM 1.2 spec also defines a number of important storage operations (Sec 10), including

- TPM_Seal()

- TPM_Unseal()

These two functions are used to store (seal) and retrieve previously-stored (unseal) secrets into and out-of the TPM, respectively.

At the time a secret is sealed into the TPM, you tell the TPM which subset of PCRs to bind it to. When it comes time to unseal the secret, the TPM will only do so if the values of those PCRs exactly match the values specified when the secret was sealed (usually their values at that moment).
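The seal/unseal rule can be modelled in a few lines. The sketch below is a hypothetical, in-memory `ToyTPM` class written for illustration; it is not the TPM 1.2 `TPM_Seal()`/`TPM_Unseal()` interface. A real TPM encrypts the sealed blob with a storage key that never leaves the chip, whereas here the secret simply sits in a Python dict. What it does show is the policy: the selected PCR values are recorded at seal time and compared at unseal time, and sealed objects survive a reboot while PCR values do not.

```python
import hashlib
import secrets


class ToyTPM:
    """Hypothetical software model of TPM PCRs plus seal/unseal (illustration only)."""

    def __init__(self, num_pcrs: int = 24) -> None:
        self.pcrs = [bytes(32) for _ in range(num_pcrs)]
        # Sealed objects survive a reboot; PCR values do not.
        self._sealed: dict = {}

    def reset(self) -> None:
        """Simulate a reboot: PCRs are volatile and go back to all zeros."""
        self.pcrs = [bytes(32) for _ in self.pcrs]

    def extend(self, index: int, measurement: bytes) -> None:
        self.pcrs[index] = hashlib.sha256(self.pcrs[index] + measurement).digest()

    def seal(self, secret: bytes, pcr_indices: list) -> str:
        # Remember the *current* values of the selected PCRs as the unseal policy.
        policy = {i: self.pcrs[i] for i in pcr_indices}
        handle = secrets.token_hex(8)
        self._sealed[handle] = (policy, secret)
        return handle

    def unseal(self, handle: str) -> bytes:
        policy, secret = self._sealed[handle]
        for index, expected in policy.items():
            if self.pcrs[index] != expected:
                raise PermissionError(f"PCR {index} does not match the sealed value")
        return secret


def boot(tpm: ToyTPM, firmware: bytes) -> None:
    """Hypothetical boot step: measure the firmware into PCR 2 before running it."""
    tpm.extend(2, hashlib.sha256(firmware).digest())


tpm = ToyTPM()
boot(tpm, b"known-good firmware")
handle = tpm.seal(b"my secret phrase", [2])

tpm.reset()
boot(tpm, b"known-good firmware")
print(tpm.unseal(handle))  # b'my secret phrase'

tpm.reset()
boot(tpm, b"tampered firmware")
try:
    tpm.unseal(handle)
except PermissionError as err:
    print("refused:", err)
```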

Understanding these three TPM functions is critical to understanding Trusted Boot. If you're struggling to wrap your head around these three functions, I recommend watching the TPM explanations in the 10-20 minute sections of these two videos:

- Attacking Intel TXT (start @ 1m 03s - 12m 15s)

- Beyond Anti Evil Maid (start @ 6m 20s - 22m 13s)

Joanna Rutkowska describes a TPM, PCRs, extend, seal/unseal, and TC "measurements"

Of course, anyone with physical access to your machine could force the TPM to unseal a secret if they could read the blobs being "measured" and call extend() with their hashes before calling unseal(). This is important to keep in mind because some tools that leverage the TPM will "measure" user input (eg a password), but many just "measure" blobs stored on the machine in plaintext.
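The following toy sketch (hypothetical names, SHA-256 assumed, no real TPM involved) illustrates why measuring only plaintext blobs is weak: the TPM only sees the digests it is handed, so code that copies the known-good blobs can feed in the same digests and reach the same PCR value. Also measuring something only the user knows, such as a password, means copying the blobs alone is no longer enough.

```python
import hashlib


def measure(blob: bytes) -> bytes:
    return hashlib.sha256(blob).digest()


def extend(pcr: bytes, measurement: bytes) -> bytes:
    return hashlib.sha256(pcr + measurement).digest()


def pcr_after(blobs: list, user_input: bytes = b"") -> bytes:
    """Fold a sequence of measurements into a fresh PCR, optionally adding user input."""
    pcr = bytes(32)
    for blob in blobs:
        pcr = extend(pcr, measure(blob))
    if user_input:
        pcr = extend(pcr, measure(user_input))
    return pcr


# Blobs stored in plaintext on the machine (e.g. readable from a flash dump).
on_disk = [b"bootblock v1", b"romstage v1", b"ramstage v1", b"kernel+initrd v1"]

# Legitimate boot: each stage is measured before it runs.
honest = pcr_after(on_disk)

# Attacker: runs their own code, but extends the digests of the copied blobs.
# The TPM cannot tell who issued extend(), so the values are identical.
forged = pcr_after(list(on_disk))
print(honest == forged)  # True

# If a user secret is also measured, copying the blobs alone is not enough.
honest_with_pw = pcr_after(on_disk, b"correct horse battery staple")
print(pcr_after(list(on_disk)) == honest_with_pw)  # False
```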

These three functions of a TPM (extend(), seal(), unseal()) are general enough to be useful to Cypherpunks, and we'll see below how tools (eg Heads) use them to verify the integrity of a Trusted Boot chain.

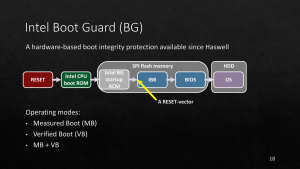

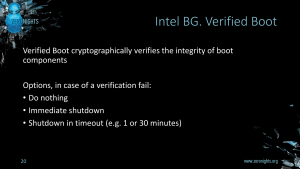

Intel Boot Guard

BG Measured Boot (Ermolov)

Intel Boot Guard (first released in June 2013) aimed to provide a way to verify the authenticity of the system's firmware. It can do this in two ways:

- Verified Boot (for Enterprises)

- Measured Boot (for Cypherpunks)

Boot Guard's Verified Boot mode checks that the firmware is signed by a key managed by the OEM. While this may be fine for Enterprises, it means that [a] if the vendor doesn't follow good key management practices, you could be at risk and [b] it literally prevents you from booting your computer if you modify the firmware yourself. This means that processors with Verified Boot cannot use Coreboot and cannot use Heads and should not be trusted.

On the other hand, Intel Boot Guard's Measured Boot is actually good. Rather than preventing you from booting the computer, the Measured Boot mode just takes a cryptographic hash of the firmware and stores that in a PCR on the TPM so that subsequent steps (eg Heads) can check it during boot.

Unfortunately, you cannot toggle between Verified Boot and Measured Boot. They're permanently "set" by the OEM using Field Programmable Fuses.
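The difference between the two modes can be sketched as two toy Python functions. This is an illustration under simplifying assumptions, not Intel's implementation: real Boot Guard verifies an RSA signature against an OEM key whose hash is burned into the fuses, which is stood in for here by a hypothetical allowlist of OEM-approved firmware hashes (`OEM_SIGNED`).

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


# Hypothetical stand-in for "images signed with the OEM's fused key".
OEM_SIGNED = {sha256(b"vendor firmware 1.0")}


class BootHalted(Exception):
    """Raised when Verified Boot refuses to run the firmware."""


def verified_boot(firmware: bytes) -> str:
    # Verified Boot: anything the OEM did not sign (e.g. your own Coreboot build) never runs.
    if sha256(firmware) not in OEM_SIGNED:
        raise BootHalted("firmware is not signed by the OEM key")
    return "running firmware"


def measured_boot(firmware: bytes, pcr: bytes) -> tuple:
    # Measured Boot: always runs the firmware, but records its hash in a PCR
    # so that later stages (e.g. Heads) can check what actually ran.
    new_pcr = sha256(pcr + sha256(firmware))
    return "running firmware", new_pcr


print(measured_boot(b"my coreboot + heads build", bytes(32))[0])  # running firmware
try:
    verified_boot(b"my coreboot + heads build")
except BootHalted as err:
    print("halted:", err)
```

The design difference is where the decision lives: Verified Boot makes it in silicon with the OEM's key, while Measured Boot only records evidence and leaves the decision to software the owner controls.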

(Anti) Evil Maid

The "Evil Maid" attack was coined by Joanna Rutkowska in 2009.

The "Evil Maid Attack" is one where the attacker (a maid of a hotel or cleaning staff of your office) gains physical access to your device when "cleaning" your hotel room or office while you're away. "Anti-Evil Maid" (or "Trusted Boot") are mitigations of this attack that can alert us if the device's integrity has been modified in any way by an "Evil Maid".

Rutkowska proposed a solution to the Evil Maid attack that involved the user sealing a secret (eg a phrase or photo) into the TPM. Because of the TPM's design, it will only unseal (and display) the secret to the user if the "measurements" are valid (ie if the set of defined PCRs have values exactly matching their values at the time the secret was sealed). And to protect the secret, the PCRs used to unseal it should be wiped after the user views the secret.

All the attacker needs to do is to sneak into the user’s hotel room and boot the laptop from the Evil Maid USB Stick. After some 1-2 minutes, the target laptop gets infected with Evil Maid Sniffer that will record the disk encryption passphrase when the user enters it next time. As any smart user might have guessed already, this part is ideally suited to be performed by hotel maids, or people pretending to be them.

-Joanna Rutkowska, coined "Evil Maid" attack

source

She suggested that the secret stored in the TPM be a photo taken by the user, which is a form of Knowledge-Based Authentication that reminds me of a popular (yet unfortunately uncommon) anti-phishing tech known as SiteKey.

But there's a problem with Rutkowska's Anti-Evil Maid solution: what if the attacker just boots your device, gets the secret, and then replaces your device with a malicious one that just outputs the secret and then prompts you for your password -- simulating your existing boot process, but relaying your password to the attacker?

An improvement to mitigate this is to encrypt the secret phrase (or photo) and always carry it with you (eg on a USB drive on your keyring) and not store the secret on your computer. Rather, the TPM stores the symmetric decryption key and that's only unsealed if the system is trustworthy (ie if the set of defined PCR registers had values exactly matching their values at the time the encryption key was sealed).
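The Anti-Evil Maid flow described in this section (seal at a trusted moment, unseal and show on each boot, then wipe the PCR) can be sketched as follows. This is a hypothetical toy, not Rutkowska's or Qubes' code: the PCR index, the in-memory storage, and "wiping" by extending the PCR with random bytes are assumptions chosen for illustration. Because a PCR cannot be written directly, extending it with random bytes moves it to an unpredictable value, so the secret cannot be unsealed again until the next reboot. The sealed secret could equally be the symmetric key for an encrypted photo kept on a USB drive.

```python
import hashlib
import os

AEM_PCR = 13  # hypothetical PCR index used only for this illustration


class ToyTPM:
    """Hypothetical in-memory stand-in for PCRs plus seal/unseal."""

    def __init__(self) -> None:
        self.pcrs = {AEM_PCR: bytes(32)}
        self.sealed = {}

    def extend(self, index: int, measurement: bytes) -> None:
        self.pcrs[index] = hashlib.sha256(self.pcrs[index] + measurement).digest()

    def seal(self, name: str, secret: bytes, index: int) -> None:
        self.sealed[name] = (index, self.pcrs[index], secret)

    def unseal(self, name: str) -> bytes:
        index, expected, secret = self.sealed[name]
        if self.pcrs[index] != expected:
            raise PermissionError("measurements changed; refusing to unseal")
        return secret


def measure_boot_chain(tpm: ToyTPM, blobs: list) -> None:
    for blob in blobs:
        tpm.extend(AEM_PCR, hashlib.sha256(blob).digest())


boot_chain = [b"bootloader", b"kernel", b"initramfs"]

# Setup, on a boot you trust: seal the user's secret phrase.
tpm = ToyTPM()
measure_boot_chain(tpm, boot_chain)
tpm.seal("aem", b"purple elephant", AEM_PCR)

# A later boot: show the secret, then "wipe" the PCR so it cannot be unsealed again.
tpm.pcrs[AEM_PCR] = bytes(32)  # simulated reboot
measure_boot_chain(tpm, boot_chain)
print("secret:", tpm.unseal("aem").decode())  # secret: purple elephant
tpm.extend(AEM_PCR, os.urandom(32))

try:
    tpm.unseal("aem")
except PermissionError as err:
    print("after wipe:", err)
```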

Beyond Anti Evil Maid (TPMTOTP)

In 2015 (several years after Rutkowska's Anti-Evil Maid solution was published), Matthew Garrett suggested solving the "displaying the shared secret on boot" problem with a one-time password (OTP); specifically, with TOTP.

Exposing a static secret [on boot] is a little bit sub-optimal. Ideally, we would not have a static secret. We would think of some sort of dynamic exposure -- something that changes over time. Something where merely having possession of a single instance of the secret being presented does not allow you to present the correct secret in the future. We want a shared secret with some sort of dynamic component... This is basically the design goal of TOTP -- the protocol that's used for the majority of 2FA.

-Matthew Garrett, creator of TPMTOTP

source

One problem with this is that the TPM doesn't have a clock, which means the TOTP secret must be (temporarily) unsealed into the system's RAM in order to produce the 6-digit OTP code. When presenting Beyond Anti Evil Maid at 32c3 in 2015, Garrett also pointed out that the Intel Management Engine (with Direct Memory Access) was another huge security risk that could undermine Trusted Boot (or your whole OS post-boot).
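For reference, here is a minimal Python sketch of TOTP as specified in RFC 6238 (HMAC-SHA1, 30-second steps, 6 digits), built on the HOTP algorithm from RFC 4226. It illustrates the point above: the code is a function of the shared secret *and* the current time, so whatever computes it needs both. In a TPMTOTP-style setup that means the secret is unsealed from the TPM and the code is computed by the main CPU using the system clock. The function names are our own, not TPMTOTP's or Heads'.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226 HOTP: HMAC-SHA1 over an 8-byte big-endian counter, then dynamic truncation."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP: HOTP where the counter is the number of `step`-second intervals since the epoch."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


# RFC 6238 test secret; at T=59s the counter is 1.
print(totp(b"12345678901234567890", at=59))  # 287082
```

Because the counter changes every 30 seconds, observing one code does not let an attacker present the next one. That defeats replay, but it does nothing against a relay.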

Another vulnerability of Garrett's proposed TPMTOTP solution is a relay attack: an attacker could steal your laptop and leave behind an identical-looking malicious "relay" laptop. On booting the malicious relay laptop, it communicates out to your real laptop -- which relays the 6-digit OTP code down to the malicious laptop. You verify that the 6-digit OTP is correct and type your FDE decryption password -- which is relayed out to the attacker with your real laptop.

Matthew Garrett on TPMs, TOTP, and mitigating Evil Maids

Heads

One year after Garrett presented TPMTOTP, Trammell Hudson forked the idea to create Heads. Hudson's version shrank the codebase to fit inside the SPI flash ROM, so the TOTP auth occurs sooner in the boot chain and reduces the attack surface.

Unlike (most) Enterprise Security solutions (eg "Secure" Boot), Heads actually has great documentation on the threat models that are and are not covered by their solution.

I'm a huge fan of the TPM...I know in the Free Software Community it's been largely unwelcome...because of the way it's been used for DRM. Since we control the TPM from the first instruction in the boot block, we're able to use it in ways that we want to, so we don't have to enable DRM, but we can use it to protect our secrets.

-Trammell Hudson, creator of Heads

source

Heads uses a lot of auth keys, and their docs clearly list each of the (private) keys Heads uses and how they're protected.

Trammell Hudson presents Heads at 33c3

Another feature added by Heads is the ability to set a TPM Disk Unlock Key. This allows LUKS users to store their symmetrically-encrypted FDE master key sealed inside the TPM. With this, you can only extract the master encryption key from the TPM if all the PCR registers match their expected values and the user enters the correct password. An important benefit here is that, because the key is sealed inside the TPM, you can do clever things like hardware rate limiting or have the key securely wiped after X failed attempts.
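The combination described above (the key is released only if the PCRs match *and* the passphrase is correct, with a limit on failed attempts) can be sketched as a toy Python class. Everything here is hypothetical: the class name, the PCR indices in the example, and the choice to wipe the key after `max_failures` wrong passphrases stand in for what a real TPM enforces in hardware (e.g. its dictionary-attack lockout). This is not how Heads' scripts are written.

```python
import hashlib
import hmac


class ToySealedDiskKey:
    """Hypothetical model of a TPM-sealed disk unlock key (illustration only)."""

    def __init__(self, disk_key: bytes, pcr_policy: dict, passphrase: str,
                 max_failures: int = 3) -> None:
        self._disk_key = disk_key
        self._policy = dict(pcr_policy)  # expected PCR values, e.g. {2: ..., 6: ...}
        self._auth = hashlib.sha256(passphrase.encode()).digest()
        self._failures = 0
        self._max_failures = max_failures

    def unseal(self, current_pcrs: dict, passphrase: str) -> bytes:
        if self._disk_key is None:
            raise PermissionError("key has been wiped")
        # 1. The boot chain must match what was measured when the key was sealed.
        if any(current_pcrs.get(i) != v for i, v in self._policy.items()):
            raise PermissionError("PCR mismatch: boot chain has changed")
        # 2. The user must also know the passphrase; failures are counted.
        attempt = hashlib.sha256(passphrase.encode()).digest()
        if not hmac.compare_digest(attempt, self._auth):
            self._failures += 1
            if self._failures >= self._max_failures:
                self._disk_key = None  # toy stand-in for securely wiping the key
            raise PermissionError("wrong passphrase")
        self._failures = 0
        return self._disk_key


good_pcrs = {2: b"\x11" * 32, 6: b"\x22" * 32}  # hypothetical measured values
key = ToySealedDiskKey(b"\x42" * 32, good_pcrs, "correct horse battery staple")
print(key.unseal(good_pcrs, "correct horse battery staple").hex()[:8])  # 42424242
```

In this toy a PCR mismatch does not count as a failed attempt; that, like the lockout threshold, is an arbitrary choice.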

PureBoot

A few years later, the company Purism was actively contributing to improve the usability of Heads, and they came out with PureBoot in 2019.

PureBoot isn't a software project or a specific tool. It's a marketing term for the collection of the following technologies:

- Neutralized and Disabled IME

- Coreboot

- A TPM

- Heads

- The Librem Key

The addition of the Librem Key adds the following notable improvements to Heads:

- Usability

- 2FA Disk Decryption

- PGP Smartcard

While displaying a 6-digit token on-boot is a brilliant way to verify boot integrity, it's not practical because most people aren't actually going to pull out their phone and verify the OTP code.

The Librem Key (which is a modified version of the Nitrokey Pro 2) simplifies this with an LED that flashes green if everything is OK or red if not.

PureBoot Demo with the Librem Key
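The green/red decision can be sketched as a counter-based HOTP check (RFC 4226) performed by the key. This is a hedged illustration: it assumes the Librem Key compares an HOTP code computed by the firmware (from a secret unsealed from the TPM) with its own copy of the secret, and the class name and five-code look-ahead window are hypothetical, not taken from the Librem Key or Heads sources.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226 HOTP with HMAC-SHA1 and dynamic truncation."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


class ToySecurityKey:
    """Hypothetical USB key that checks HOTP codes and reports an LED colour."""

    def __init__(self, secret: bytes, window: int = 5) -> None:
        self._secret = secret
        self._counter = 0
        self._window = window

    def verify(self, code: str) -> str:
        # Accept a code from the next few counter values, to tolerate boots
        # where the host incremented its counter but the key never saw the code.
        for counter in range(self._counter, self._counter + self._window):
            if hmac.compare_digest(hotp(self._secret, counter), code):
                self._counter = counter + 1  # never accept this or an older code again
                return "green"
        return "red"


shared = b"12345678901234567890"  # same secret sealed in the TPM and stored on the key
key = ToySecurityKey(shared)
host_code = hotp(shared, 0)       # computed by firmware after unsealing the secret
print(key.verify(host_code))      # green
print(key.verify(host_code))      # red: a replayed code is rejected
```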

Another benefit of the Librem Key is that you can generate PGP keys on it that cannot be extracted. This allows you to use 2FA decryption of your LUKS-encrypted drive (though not easily). And, of course, if the user always inserts their Librem Key to decrypt their disk at boot, then they'll always see the integrity LED at boot.

And you can use the PGP key stored on your Librem Key to sign all the files in /boot/ (to detect tampering of your linux kernel, for example).
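Signing files in /boot/ typically means signing a list of their hashes. The Python sketch below builds such a list in a `sha256sum`-like format and reports which files changed; it is a hypothetical illustration, not Heads' actual script or file format. The signing step is left out because it happens on the smartcard: the manifest would be signed with the key on the Librem Key (for example with `gpg --detach-sign`), and that signature verified before the manifest is trusted.

```python
import hashlib
import tempfile
from pathlib import Path


def build_manifest(boot_dir: Path) -> str:
    """Return "<sha256>  <relative path>" lines for every file under boot_dir, sorted by path."""
    lines = []
    for path in sorted(p for p in boot_dir.rglob("*") if p.is_file()):
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        lines.append(f"{digest}  {path.relative_to(boot_dir).as_posix()}")
    return "\n".join(lines) + "\n"


def parse_manifest(manifest: str) -> dict:
    entries = {}
    for line in manifest.splitlines():
        if line:
            digest, name = line.split("  ", 1)
            entries[name] = digest
    return entries


def find_changes(boot_dir: Path, trusted_manifest: str) -> list:
    """List files that were added, removed, or modified since the manifest was signed."""
    trusted = parse_manifest(trusted_manifest)
    current = parse_manifest(build_manifest(boot_dir))
    return sorted(name for name in trusted.keys() | current.keys()
                  if trusted.get(name) != current.get(name))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        boot = Path(tmp)
        (boot / "vmlinuz").write_bytes(b"kernel")
        (boot / "initrd.img").write_bytes(b"initrd")
        manifest = build_manifest(boot)  # in practice: sign this with the Librem Key
        (boot / "vmlinuz").write_bytes(b"evil kernel")
        print(find_changes(boot, manifest))  # ['vmlinuz']
```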

Limitations & Improvements

Processor, TPM Trust

Trusted Boot only works if you can trust your processor and your TPM.

Most processors (since 2013) include a remote-access backdoor that lives on a distinct microprocessor that can turn itself on (even when your machine is off), install software, and exfiltrate data from your system. Fortunately, this can be disabled and mostly neutralized (Update: IME neutralization no longer works on newer processors).

Relay attack

While the TOTP solution cleverly solves the replay attack, it's still vulnerable to a relay attack.

An attacker could steal your laptop and leave behind an identical-looking malicious laptop. When you (unknowingly) boot the malicious relay laptop, it communicates out to your real laptop -- which relays the 6-digit OTP code down to the malicious laptop. You verify that the 6-digit OTP is correct and type your FDE decryption password -- which is relayed out to the attacker with your real laptop.

Security vs Convenience

While using a Hardware Security Key (eg Librem Key) is more convenient than using your phone's TOTP app, it's also less secure.

If someone gains access to both your laptop and your Librem Key, then they can change your firmware, reset your TPM, reset your Librem Key, and sync them all back up with each other. This attack can happen, for example, [a] during the roughly one-third of each day when you're asleep or [b] when you're going through a security screening and an authority demands that you pass your laptop and your keyring through a machine out of your sight (until we have metal-free hardware security keys or, uhh, subcutaneous OpenPGP smartcards?).

The consequence is that the "flashing green LED" on-boot can give a false sense of security.

This sort of tampering can still be detected by double-checking the TOTP code against your phone on boot (assuming your phone is protected with a strong passphrase), but therein lies the tradeoff between Security & Convenience.

TOTP Secret

If the TOTP secret is stolen by an adversary, then they could build a malicious replica of your device that simulates your authenticated boot process correctly (outputting a valid 6-digit OTP code) before prompting you for (and stealing) your password.

Because [a] the TOTP secret needs to be combined with the current time to produce an OTP code and [b] the TPM doesn't have an onboard clock, the TOTP secret is designed to be unsealed (as opposed to, for example, an RSA key generated inside the TPM, which cannot be exported from the TPM). This design means that if someone has physical access to the TPM, then they can manually extend the PCRs to a known-good state to unseal and extract the TOTP secret.

Additionally, an attacker may also be able to steal the TOTP secret if you stored it on your phone; handsets are generally less secure devices since most people don't use strong passphrases on their mobile devices.

It shouldn't be possible to steal the TOTP (or HOTP) secret from a hardware security key.

Suspend Attack

Heads does not verify the integrity of your system when unsuspending your computer.

If you're using your laptop in an insecure place or moving it between secure spaces, it's best practice to shut down your computer -- not suspend it.

For example: how would you know that your xscreensaver password prompt isn't a malicious simulation that'll relay your unlock password to your attacker?

To mitigate this risk:

- Shut down your device (don't suspend it) when moving

- Use a distinct passphrase for [a] booting (FDE decryption) and [b] screen unlock

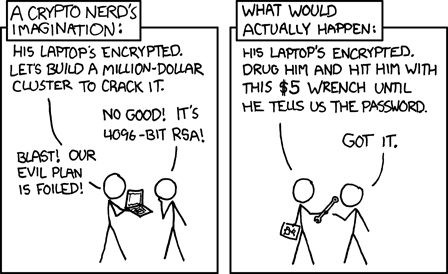

Duress Password

A duress password can mitigate Rubber Hose Cryptanalysis (source: xkcd)

One thing I'd really like to see Heads include is a duress password.

Especially for users who store their FDE master key in the TPM, it would be great if a user could type a special string into the boot password prompt that would cause the TPM to reset, thereby rendering the disk's entire contents permanently useless (eg if you're being forced to enter a password against your will).

Nail Polish

One of the best ways to make your device tamper-evident is very low-tech: (rainbow) glitter nail polish.

Glitter Nail Polish and PureBoot

Further Reading

- Intel x86 considered Harmful

- (Android) Verified Boot (dm-verity)

- Linux Boot (NERF)

- Trench Boot

- Coreboot Measured Boot Documentation

Hi, I’m Michael Altfield. I write articles about opsec, privacy, and devops

This is a really useful blog, many thanks for it - it answers a lot of questions I had on the process. Have you tried using PureBoot & Librem Key on something other than the Librem 14 with PureOS? I am interested in building a secure thin client receiver to keep the local data to a minimum. I was thinking potentially a Framework laptop (so I can take pictures of the internals and potentially source my own parts) as well as the nail polish approach. I would like to investigate this with one of the thin client receiver OSes like 10zig or iGel. I'm conscious, though, that our users might get a bit confused about having to re-sign files when the OS updates etc, but I understand this could be optional?

Should have added this would only be if the firmware gets ported and taken up by Heads or someone, I know frame.work uses opensource firmware also - but for now I am interested in what can be done to work on the user experience while keeping security as high as possible.

Hi Michael, that is a very good write-up. The open-source firmware, privacy, and security community lacks people who can explain such complex topics in an approachable way.

Since I'm the founder of the company which deals with problems you're trying to explain every day, I decided to leave some comments and clarifications.

Secure Boot - the name alone is very confusing. What I try to explain every time is that what we typically mean is UEFI Secure Boot, defined in the UEFI Specifications. To make this technology work, we also need other components that continue the chain of trust from the beginning of the boot process (UEFI Secure Boot covers DXE and later phases of the boot process, up to the handover to the OS). What is inherently broken in UEFI Secure Boot is explained by Trammell as "random OEMs who may not have the best key management policies". UEFI and all its features were created for OEMs/ODMs with the mindset of protecting the value chain Intel developed over the years in the computer industry. The key misconception is about whether we really can use UEFI Secure Boot technology for our benefit and stick to cypherpunks' ideals. All we have here is cryptography, and if it is correctly implemented, we can use it, especially when dealing with open-source firmware distributions like Heads, PureBoot, or Dasharo. The problem is that OEMs/ODMs do not make things simpler by completely breaking UEFI Secure Boot, but the fact that most companies have no means of correctly exposing this feature to the end user doesn't mean it is useless. In an ideal world, we all should be able to provision our own keys in UEFI databases and keep the chain of trust tied to our keys.

Intel Boot Guard - fusing the device to your own keys can also be completely fine (Enterprise security in Cypherpunks' hands). The key problem here is access to tools that can do that and help maintain it. Some Cypherpunk threat models can justify that. Of course, measured boot would always be a more flexible and scalable solution, e.g. verified boot causes problems with ownership transfer. Measured boot also gives you the ability to create your own custom policy: what you trust when, and what operations you want to perform under a given trust level. The missing pieces of measured boot are attestation and the long chain that delivers measurements (aka the Trusted Computing Base - TCB). The TCB can be minimized by, e.g., D-RTM technology.

The key security issue of Heads and similar solutions is that measurement chains are only as good as the first measurement, so the code that makes the first measurement has to be somehow protected. Protection of that code can be done in many ways (Intel Boot Guard, a read-only SPI region), but without it, someone can replace the initial code that makes the first measurement and just repeat all measurements with the correct values despite other code booting.

ME concerns us, as does an ever-growing number of peripheral microcontrollers (e.g. USB-PD) with firmware that can compromise the system, but this type of technology is ever-present (e.g. Secret life of SIM card), so we have to be aware of it when considering our threat model. Ultimate protection would cost an almost infinite amount of money, so everything is about the threat model, the value you try to protect, and the expected value you get from investing a given amount of money in protection mechanisms. It all boils down to "who is after you?"

D-RTM can help mitigate relay attacks by adding some unique ID of the device, which is hard (or impossible?) to change, to the dynamically measured value.

Suspend attack vectors change dramatically in light of modern sleep states like S0ix, with which Xen has a lot of problems. The sleep-state landscape will evolve even further with Arm-based laptops.

One more time, you did great work educating a lot of people about boot problems and the ways they are resolved today. It would be great to work together to improve awareness even more and lead the open-source firmware ecosystem into a sustainable, privacy-centric, secure, and trustworthy environment.

Thank you!

Nit: there are probably some typos since once there are cyberpunks, cypherpunks, and cyperpunks.

Thanks for the thorough comment. I've fixed the typos 🙂

Thanks for the great article!

(Is there a typo in "Another vulnerability of Garrett's proposed TPMTOTP solution is a replay attack"? Shouldn't it be "a relay attack"?)

Thank you! Good eye, fixed 🙂

Hello Michael, thank you so much for this blog (I will reference it to others going forward)! It has cleared up several questions and technical head-scratching moments I have had regarding coreboot/HEADS/TPM etc.

One question I still have is around the AEM piece. I think something like HEADS is a must if you travel frequently and care about security. But the other angle is a desktop at home... let's assume no physical access except for the owner is possible. In this case AEM like HEADS would be overkill, no? The biggest threat then would be a Xen breakout 0day or something of that nature, or another network-based attack, right?

But thinking about it, to place persistence an adversary may modify /boot which would get picked up by HEADS so I guess I just go back and forth with if HEADS is worth it for a desktop.

Like with anything in security, if the end user is more negatively affected by it than any potential bad actor, then you know the end user will indeed reject it no matter the benefit. Not everyone is security at all costs, and Secure Boot is a perfect example of trying to satisfy consumers' doubts on Windows security specifically. Windows 11 goes a step further with Secure Boot and TPM 2.0, both of which were options in Windows 10 and 8. But if you know anyone using any OS other than Windows, they are probably avoiding Secure Boot. When evaluating Linux desktop distributions, I normally disable Secure Boot to avoid any issues. Some, like Ubuntu, do offer support for Secure Boot, but only sort of. I look at Chrome OS as being obsessed with isolating through multiple partitions that can protect the integrity of the OS. The trouble with the Chrome OS implementation is that you cannot dual boot another OS. This is a great article on security, and I am glad to have stumbled across it. Unfortunately, consumers love and hate security. They love it when it protects them but hate its inconveniences.